Highly scalable, maintainable and reliable applications are the holy grail for any Enterprise Architect; however, hardware availability and high-demand processing will constrain the design. Among the questions that come to mind when outlining an architecture are the following:

- How transactional is the data?

- How many transactions per unit of time will the system have to handle?

- Are there any data- or time-intensive processes?

- How many concurrent users will operate the system?

- Will users work in a disconnected environment, forcing the system to deal with outdated data?

Note that in the first paragraph I used "holy grail" to describe the pursuit of the three principles of enterprise architecture. Sometimes the answers to the previous questions lead to a solution that works against one or more of the principles of scalability, maintainability or reliability; the pursuit then becomes a holy quest that many would consider impossible. For example, take a highly transactional web application: by definition, highly transactional means data is updated following the ACID principles (Atomicity, Consistency, Isolation, Durability), and by being a web application it has to work in a disconnected environment; thus, if two users are modifying the same data at the same time, one of the two is guaranteed to be working with outdated data.
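To make the outdated-data problem concrete, here is a minimal sketch of how the conflict shows up and how it can be detected with a version number, which is the idea behind optimistic locking (JPA exposes it through the `@Version` annotation). The `Row` and `Snapshot` classes are hypothetical in-memory stand-ins for a database record; a real system would do the same check with an `UPDATE ... WHERE version = ?` statement rather than an `AtomicReference`.

```java
import java.util.concurrent.atomic.AtomicReference;

public class StaleUpdateDemo {

    // Immutable copy of a record as a user saw it, tagged with a version number.
    static final class Snapshot {
        final String region;
        final long version;

        Snapshot(String region, long version) {
            this.region = region;
            this.version = version;
        }
    }

    // Hypothetical in-memory stand-in for a database row.
    static final class Row {
        private final AtomicReference<Snapshot> current;

        Row(String region) {
            current = new AtomicReference<>(new Snapshot(region, 1));
        }

        Snapshot read() {
            return current.get();
        }

        // Saves only if nobody has written since 'seen' was read; a false result
        // tells the caller it was working with outdated data.
        boolean save(Snapshot seen, String newRegion) {
            return current.compareAndSet(seen, new Snapshot(newRegion, seen.version + 1));
        }
    }

    public static void main(String[] args) {
        Row row = new Row("WA");
        Snapshot first = row.read();   // both users load version 1 into their pages
        Snapshot second = row.read();
        System.out.println(row.save(first, "OR"));  // true
        System.out.println(row.save(second, "CA")); // false: the second user's copy is stale
        Snapshot stored = row.read();
        System.out.println(stored.region + " v" + stored.version); // OR v2
    }
}
```

The version check does not prevent the conflict; it only guarantees that the second writer finds out instead of silently overwriting the first writer's change.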

Many of the problems in terms of data consistency have to be addressed during architecture design, or can be addressed with a re-architecture of the system if for some reason they were not taken into consideration the first time. Once the factors within the scope of data management and processing are addressed, the scalability of the system comes into play. Think of scalability as your main line of defense against user demand for processing: if your system depends on a single player (a single piece of hardware), you will get a bigger player (better hardware) in an attempt to stop the user's progress (performance degradation), as shown below:

As you can see, a team of one will be outperformed if the user offensive grows beyond its strength. Changing the defense by adding some help for our star player and organizing it in a pyramidal approach, as shown below, still depends on the correct organization, and it will eventually be overwhelmed by the offensive if one of the defense players fails, especially if the one failing is our star player.

For those of us looking to win the Super Bowl of scalability, linear scalability should be our secret play. We should not depend on one star player but on a team that can grow in number to stand up to the user offensive: all players should be the same, they should all stand side by side with their peers, and if one fails the next ones can close the gap left by their teammate. Take a look at the following diagram of the linear scalability defense play. If you know your system as well as you should, you know how many user hits each defensive player can handle; therefore, given a projected growth of the offensive, we know a priori how many new defense players we have to bring into play.
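The "we know a priori how many players we need" argument is simple arithmetic once the system scales linearly. The sketch below assumes a purely linear model (every node handles the same load, and each added node adds exactly that much capacity with no coordination overhead); the figures in `main` and the `spares` parameter, which keeps capacity intact when a node fails, are hypothetical.

```java
public class CapacityPlan {

    // Nodes required under a linear model: each node handles perNodeCapacity,
    // and adding a node adds exactly that much capacity.
    static long nodesNeeded(long projectedLoad, long perNodeCapacity, int spares) {
        if (perNodeCapacity <= 0) {
            throw new IllegalArgumentException("perNodeCapacity must be positive");
        }
        if (projectedLoad < 0 || spares < 0) {
            throw new IllegalArgumentException("load and spares must be non-negative");
        }
        // Ceiling division: a partly used node still has to be deployed.
        long base = (projectedLoad + perNodeCapacity - 1) / perNodeCapacity;
        return base + spares;
    }

    // How many nodes to bring into play on top of the ones already running.
    static long nodesToAdd(long currentNodes, long projectedLoad, long perNodeCapacity, int spares) {
        return Math.max(0, nodesNeeded(projectedLoad, perNodeCapacity, spares) - currentNodes);
    }

    public static void main(String[] args) {
        // Hypothetical figures: 500 requests/s per node, 2,300 requests/s projected,
        // plus one spare node so a single failure still leaves enough capacity.
        System.out.println(nodesNeeded(2_300, 500, 1));   // 6
        System.out.println(nodesToAdd(3, 2_300, 500, 1)); // 3
    }
}
```

In a real system the per-node figure would come from load testing, and any coordination overhead would make the curve less than linear.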

Systems looking to bring linear scalability into their game strategy should be architected in a way that allows linear processing. In terms of hardware, linearity can be handled in-house by adding more hardware to your own data center, or by acquiring services from a company that offers hardware grids; we will talk about those later. In terms of processing, there are several process-gridifying solutions on the market, and we will look at an open source one for Java in a quick demo next. The framework presented here is http://www.gridgain.com/, a solution that requires no configuration: the code gets deployed automatically to the nodes of the grid and executed transparently as if it were in a single Java VM.

1. Get data from the database: we are going to get the list of customers from a Northwind database in Microsoft SQL Server 2005 using JPA.

3. In the split method, we create a job for each customer in the task, override the job's execute method and add our processing logic inside it.

4. In the reduce method, we get all the results and aggregate the data back into a new List.


5. To test that the tasks are performed by different nodes in the grid, two more instances were created, plus the one created when the application runs. Therefore, our grid topology shows three nodes sharing the same CPUs and network interface.

6. When we run this demo, we can see jobs being executed simultaneously on different nodes, and finally, on the main node, we can see the aggregated data with the changes made to the customer regions. If you look at the Customer IDs, it is easy to notice that the customers processed by each node are different.

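The split/reduce flow in steps 3 and 4 can be sketched without a grid. This is not the GridGain API: it only mimics the same shape with `java.util.concurrent`, where a fixed thread pool stands in for the grid and each worker thread plays the role of a node. The `Customer` class, the region-normalizing rule and the sample rows are hypothetical, and the JPA query from step 1 is left out.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

public class SplitReduceSketch {

    // Hypothetical stand-in for the JPA Customer entity loaded in step 1.
    static final class Customer {
        final String id;
        final String region;

        Customer(String id, String region) {
            this.id = id;
            this.region = region;
        }

        @Override
        public String toString() {
            return id + ":" + region;
        }
    }

    // "split": one job per customer; the job body carries the processing logic.
    static List<Callable<Customer>> split(List<Customer> customers) {
        List<Callable<Customer>> jobs = new ArrayList<>();
        for (Customer c : customers) {
            jobs.add(() -> {
                // Hypothetical processing rule: normalize the region code.
                String region = (c.region == null) ? "N/A" : c.region.toUpperCase(Locale.ROOT);
                System.out.println(Thread.currentThread().getName() + " processed " + c.id);
                return new Customer(c.id, region);
            });
        }
        return jobs;
    }

    // "reduce": collect every job result back into a new List.
    static List<Customer> reduce(List<Future<Customer>> results)
            throws InterruptedException, ExecutionException {
        List<Customer> aggregated = new ArrayList<>();
        for (Future<Customer> f : results) {
            aggregated.add(f.get());
        }
        return aggregated;
    }

    // A fixed thread pool stands in for the grid; each worker thread plays a node.
    static List<Customer> run(List<Customer> customers, int nodes) {
        ExecutorService grid = Executors.newFixedThreadPool(nodes);
        try {
            return reduce(grid.invokeAll(split(customers)));
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("Interrupted while waiting for jobs", e);
        } catch (ExecutionException e) {
            throw new IllegalStateException("A job failed", e.getCause());
        } finally {
            grid.shutdown();
        }
    }

    public static void main(String[] args) {
        // Sample rows for illustration only, not real Northwind data.
        List<Customer> customers = List.of(
                new Customer("CUST1", null),
                new Customer("CUST2", "east"),
                new Customer("CUST3", "west"));
        System.out.println(run(customers, 3)); // [CUST1:N/A, CUST2:EAST, CUST3:WEST]
    }
}
```

As in step 6, the "processed" lines show different worker threads handling different customers, in no fixed order, while `invokeAll` returns the results in task order, so the aggregated list is deterministic.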

All nodes in our topology should get a similar amount of jobs, depending on their load; hence, linear scalability. The load will be evenly distributed across our grid, and if we need more processing it will be just a matter of adding new nodes. However, more nodes will eventually require more hardware, and adding the extra hardware can prove difficult due to company policies and procurement delays; moreover, hardware gets outdated quickly and the investment in your data center fades away.
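One simple way to spread jobs "depending on their load" is to hand each job to the node that currently carries the least work. The sketch below is a generic least-loaded assignment, not GridGain's own load-balancing strategy; job costs are abstract units and the example inputs are hypothetical.

```java
import java.util.Arrays;
import java.util.PriorityQueue;

public class LeastLoadedBalancer {

    // Assigns each job to the node with the smallest accumulated load so far.
    // Returns owner[j] = index of the node that runs job j.
    static int[] assign(int[] jobCosts, int nodes) {
        if (nodes <= 0) {
            throw new IllegalArgumentException("need at least one node");
        }
        long[] load = new long[nodes];
        // Order nodes by current load; break ties by index so the result is deterministic.
        PriorityQueue<Integer> queue = new PriorityQueue<>(
                (a, b) -> load[a] != load[b] ? Long.compare(load[a], load[b]) : Integer.compare(a, b));
        for (int n = 0; n < nodes; n++) {
            queue.add(n);
        }
        int[] owner = new int[jobCosts.length];
        for (int j = 0; j < jobCosts.length; j++) {
            int node = queue.poll();   // the least-loaded node leaves the queue
            owner[j] = node;
            load[node] += jobCosts[j]; // update its load while it is out of the queue
            queue.add(node);           // and re-insert it with its new priority
        }
        return owner;
    }

    public static void main(String[] args) {
        // Equal-cost jobs are spread evenly, in round-robin order.
        System.out.println(Arrays.toString(assign(new int[] {1, 1, 1, 1, 1, 1}, 3))); // [0, 1, 2, 0, 1, 2]
        // One heavy job keeps node 0 busy, so the light jobs go to node 1.
        System.out.println(Arrays.toString(assign(new int[] {5, 1, 1, 1}, 2)));       // [0, 1, 1, 1]
    }
}
```

Adding a node only means one more entry in the queue, which is what makes "just add nodes" workable when jobs are independent.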

Hardware has become a simple commodity; what really matters is processing, bandwidth and storage. Many companies have decided to outsource their entire data center, and there is now a new trend in managing data centers that goes beyond moving your hardware to someone else’s facility: virtualizing the entire IT infrastructure. This could be bad news for your infrastructure team: no more wiring, no more A/C problems, no more midnight maintenance, no more corrupted backups, and a lot of other “no more”s.

In a virtual world everybody is happy: all the hassle of server setup and maintenance is taken away, and in a matter of minutes you can have your own infrastructure. Systems can grow beyond the projected demand at the click of a button and can be downsized when the waters return to their course. We get an infrastructure that can grow with minimum impact at minimum cost; even better, we can give back processing and storage capacity when it is no longer needed. The best thing about it is that we get charged for what we use, meaning that we pay for the CPU minutes, the terabytes we store and the bandwidth we use.
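Pay-per-use billing reduces to a sum of metered quantities times unit prices. The rates below are made up for illustration (every provider publishes its own rate card), and CPU time is metered in hours here only to keep the arithmetic in whole cents; the example compares a QA server that is switched on only when needed with one left running all month.

```java
public class UsageBill {

    // Hypothetical unit prices in US cents, for illustration only.
    static final long CENTS_PER_CPU_HOUR = 10;
    static final long CENTS_PER_GB_STORED = 15;      // per GB per month
    static final long CENTS_PER_GB_TRANSFERRED = 17;

    // Pay-per-use: the bill is the sum of each metered quantity times its unit price.
    static long monthlyCents(long cpuHours, long gbStored, long gbTransferred) {
        return cpuHours * CENTS_PER_CPU_HOUR
                + gbStored * CENTS_PER_GB_STORED
                + gbTransferred * CENTS_PER_GB_TRANSFERRED;
    }

    public static void main(String[] args) {
        // A QA server switched on for 160 hours versus one left running all month (720 hours).
        System.out.println(monthlyCents(160, 50, 20)); // 2690
        System.out.println(monthlyCents(720, 50, 20)); // 8290
    }
}
```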

Having someone so big (the virtualization company) negotiating better prices for servers, storage and bandwidth will translate into better and better prices for our systems. Think outside the box: even the Staging and QA environments for your development teams can be virtualized; whenever a new project requires Staging and QA, we can add two new servers, once again at the click of a button.

There are two big companies in the market offering this kind of service, with different approaches:

- Google, with http://code.google.com/appengine/, allows you to run your applications on Google's infrastructure. It has several limitations, the most notable being that Python is the only supported language and, on top of that, it is still in beta. It has some benefits if you want to interact with other Google services, although this is not very helpful if you already have applications in production.

- Amazon, with its web services (http://aws.amazon.com/), provides access to Amazon's infrastructure: a virtual environment to deploy a wide range of applications that supports different operating systems (with different amounts of effort in each case) and, on top of that, applications written in any language.

Finally, I came across a third company that offers yet another approach to scalable systems. I like this one more as an option for those with systems already in production: it provides virtualization of Windows and Linux servers in a single infrastructure, very much like the enterprise environments we already have. The company is http://www.gogrid.com/, and it provides a web interface to manage the entire infrastructure, allowing you to add servers, load balancers, storage and private networks, pretty much as if you had physical access to the hardware.

While some recommend Amazon and Google services for those with new business ideas coming from Web 2.0, GoGrid would be a good alternative for those with systems that can be easily scaled, depending only on the availability of hardware.

Systems that want to take advantage of this new trend in data centers should be architected so that they can be easily scaled, either by using processing grid solutions such as the one presented before for Java, or by some alternative “in house” architecture design that allows fast and easy deployment in a grid-enabled environment.

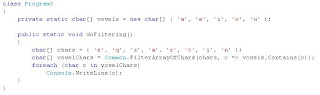

Here we have created a Log partial class that writes a log entry whenever the DoLog() method is executed. This class contains two partial methods, PreLog and PostLog, which for the moment have no implementation. This code compiles fine, and if we call the DoLog() method, the string "Doing my logging." will be the only thing printed to the console. The story changes if we add the following code to the mix:


In this other partial class, we add implementations for the PreLog and PostLog partial methods we declared in the first partial class. Now that both methods have an implementation, they will be executed. This means that whenever the DoLog() method is called, the following will be printed to the console: