Along with the release of Visual Studio 2008 some months ago, several teams at Microsoft have been developing complementary tools. One of these is the ASP.NET 3.5 Extensions, which adds new functionality not only to ASP.NET 3.5 but also to ADO.NET.

Some of the features included in this pack are new Silverlight controls, ADO.NET Data Services, the ADO.NET Entity Framework, ASP.NET AJAX back button support, ASP.NET Dynamic Data and, last but not least, the ASP.NET MVC Framework.

Before we move forward, it's important to mention that this new functionality is in a "preview" state and therefore is not officially supported by Microsoft.

Now, let's concentrate on the ASP.NET MVC Framework.

MVC is a design methodology that divides the implementation of an application into three component roles: models, views and controllers.

"Models" are the components of the application that are in charge of maintaining the state of the application. That state may be persisted in a database or kept in memory.

"Views" are the components in charge of displaying the application's user interface. The UI is almost always a representation of the model data.

"Controllers" are the components in charge of handling user interaction, manipulating the model and finally choosing a view to render.

Main features of the MVC Framework:

- It doesn't use postbacks or viewstate. In other words, this model is not attached to the traditional ASP.NET postback model and page lifecycle for interactions with the server. All the user interactions are routed to a controller class.

- It supports all the existing ASP.NET features such as output and data caching, membership and roles, Forms authentication, Windows authentication, URL authorization, session state management and other areas of ASP.NET.

- It supports using existing ASP.NET markup pages (.aspx files), user controls (.ascx files), and master pages (.master files) as view templates.

- It contains a URL mapping component that enables you to build applications with clean URLs. The URL routing feature explicitly breaks the connection between physical files on disk and the URL that is used to access a given bit of functionality. This also helps the search engines. For instance, rather than access http://localhost/Products/ProductDetail.aspx?item=2 you now use http://localhost/Products/LaysFrieds.

- Everything in the MVC framework is designed to be extensible. You can create your own view engine or URL routing policy, just to mention a couple.

- It separates application tasks such as UI logic, input logic and business logic, and it supports testability and test-driven development (TDD). Because the components are loosely coupled, running unit tests is quite easy.

Creating a simple ASP.NET MVC Application

We'll create a basic application that displays a list of Products based on the category and subcategory chosen by the user. This sample will hopefully clear things up and give you a starting point for digesting this new ASP.NET feature.

So, let's get started.

Using Visual Studio 2008, let's create a project of type ASP.NET MVC Web Application.

After a few seconds you'll get a working project skeleton with a default page as well as Index and About pages.

The default project template will look like this:

From here you're all set to start modifying this simple template to suit your needs. Next, we'll create all the "Model" logic associated with our sample. All this logic should be placed in the Models folder defined by the template. For this sample I'm using the ADO.NET Entity Framework, which ships in the same package as the ASP.NET MVC Framework. This speeds things up and lets us have a data access component up and running very quickly.

I'm using the AdventureWorks database shipped with SQL Server 2005 and from there I'm only using three tables: ProductCategory, ProductSubCategory and Product.

The model created by the Entity Framework looks like this:

One important thing to remember is the routing model being used. Since this is a simple application, I'm fine with using the default one proposed by the project template, which looks like the image below.

What this is telling us is that rather than go with http://localhost/Products/SubCategories.aspx?id=2 as the URL for accessing a page, I'll respond to http://localhost/Products/SubCategories/2.
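To make the mapping concrete, here is a minimal, framework-free sketch of how such a URL can be split into routing values. It assumes the default route has the shape `[controller]/[action]/[id]`; the `RouteSketch` class and its `Match` method are hypothetical names used only for illustration and are not part of the MVC Framework.

```csharp
using System;
using System.Collections.Generic;

public static class RouteSketch
{
    // Matches a URL path against a pattern such as "[controller]/[action]/[id]".
    // Returns null when the path has more segments than the pattern.
    public static Dictionary<string, string> Match(string pattern, string path)
    {
        string[] names = pattern.Split('/');
        string[] parts = path.Trim('/')
            .Split(new[] { '/' }, StringSplitOptions.RemoveEmptyEntries);
        if (parts.Length > names.Length) return null;

        var values = new Dictionary<string, string>();
        for (int i = 0; i < parts.Length; i++)
        {
            // Strip the surrounding brackets to get the token name.
            values[names[i].Trim('[', ']')] = parts[i];
        }
        return values;
    }

    public static void Main()
    {
        var values = Match("[controller]/[action]/[id]", "/Products/SubCategories/2");
        // Prints: Products.SubCategories(2)
        Console.WriteLine("{0}.{1}({2})",
            values["controller"], values["action"], values["id"]);
    }
}
```

The real routing component does more than this (defaults, for example), but the idea is the same: the URL names a controller, an action and an id rather than a file on disk.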

Now that we're done with the Model, it's time to start coding the Controller, which acts as the interpreter and handler between the model and the view. We could say this is the heart of the framework. Application and data retrieval logic should only be written inside controller classes. Controller classes then choose which view to render.

Let's add a new item of type MVC Controller Class under the Controllers folder.

From there we start defining all the methods that will interact with our view.

A way to define these methods is as follows:

To keep this post short, I'll only explain one of the three methods needed to get this application running as expected. Hopefully you'll get the idea and have no problem implementing the others.

We decorate every method that will act as a controller action with the ControllerAction attribute. Then we implement the logic, and finally we call the RenderView method, which passes the data to the view and renders it.
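The pattern described above can be sketched roughly as follows. To keep the example compilable on its own, `ControllerActionAttribute` and `Controller` are declared here as simple stand-ins for the preview framework types; the `ProductsController` class, the `Categories` method, the `Id` and `Name` properties and the sample data are all assumptions made for illustration.

```csharp
using System;
using System.Collections.Generic;

// Stand-ins for the preview framework types so the sketch compiles on its own.
[AttributeUsage(AttributeTargets.Method)]
public class ControllerActionAttribute : Attribute { }

public abstract class Controller
{
    public string RenderedView { get; private set; }
    public object RenderedData { get; private set; }

    // Records which view was chosen and the data handed to it.
    protected void RenderView(string viewName, object viewData)
    {
        RenderedView = viewName;
        RenderedData = viewData;
    }
}

public class ProductCategory
{
    public int Id { get; set; }
    public string Name { get; set; }
}

public class ProductsController : Controller
{
    [ControllerAction]
    public void Categories()
    {
        // Hypothetical in-memory data; in the sample application this
        // would come from the Entity Framework model.
        var categories = new List<ProductCategory>
        {
            new ProductCategory { Id = 1, Name = "Bikes" },
            new ProductCategory { Id = 2, Name = "Components" }
        };

        // Hand the data to the view named "Categories".
        RenderView("Categories", categories);
    }
}

public static class Program
{
    public static void Main()
    {
        var controller = new ProductsController();
        controller.Categories();
        Console.WriteLine(controller.RenderedView); // prints "Categories"
    }
}
```

Because the action is just a method on a plain class, a unit test can call it directly and inspect the view name and data it chose, which is where the testability benefit mentioned earlier comes from.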

Finally, let's get into the view. No rocket science here. First we add a new item of type MVC View Content Page. This type of view will ask us for a Master Page. If you haven't noticed it yet, there's a folder named Shared inside the Views folder. This is where we put all the views that are common to the application and could eventually be reused.

After adding the view, notice that the page inherits from the System.Web.Mvc.ViewPage base class. This class provides some helper methods and properties. One of these properties is named "ViewData", which provides access to the view-specific data objects that the Controller passed as arguments to the RenderView() method.

To access the data in ViewData, we need to make the page inherit from ViewPage<T>, where T is a strong type, in this case a List of Product Categories.

This gives us full type safety, IntelliSense, and compile-time checking within the view code.

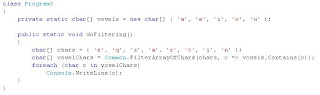

In the HTML part of the view we need to iterate through the ViewData object and use the ActionLink method of the Html object provided by the ViewPage class. The ActionLink method is a helper that generates HTML hyperlinks dynamically that link back to action methods on controllers.

Let's write a foreach loop that generates a bulleted HTML category list like the image below.
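As a rough, framework-free illustration of what that loop produces, the sketch below builds the same kind of bulleted list in plain C#. The `ActionLink` method here is a hypothetical stand-in for the Html.ActionLink helper (its real signature is not shown in this post), and the `CategoryListSketch` class, the `/Products/SubCategories/{id}` link shape and the sample category are assumptions.

```csharp
using System;
using System.Collections.Generic;
using System.Net;
using System.Text;

public static class CategoryListSketch
{
    // Hypothetical stand-in for Html.ActionLink: links to /controller/action/id.
    public static string ActionLink(string text, string controller, string action, int id)
    {
        return string.Format("<a href=\"/{0}/{1}/{2}\">{3}</a>",
            controller, action, id, WebUtility.HtmlEncode(text));
    }

    // Builds a <ul> with one linked <li> per category (key = id, value = name).
    public static string RenderList(IEnumerable<KeyValuePair<int, string>> categories)
    {
        var html = new StringBuilder("<ul>");
        foreach (var category in categories)
        {
            html.Append("<li>")
                .Append(ActionLink(category.Value, "Products", "SubCategories", category.Key))
                .Append("</li>");
        }
        return html.Append("</ul>").ToString();
    }

    public static void Main()
    {
        var categories = new[] { new KeyValuePair<int, string>(1, "Bikes") };
        // Prints: <ul><li><a href="/Products/SubCategories/1">Bikes</a></li></ul>
        Console.WriteLine(RenderList(categories));
    }
}
```

In the actual view the same loop is written as inline code in the .aspx page, iterating over ViewData and emitting one list item per category.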

Conclusions

First of all, the MVC framework doesn't come as a replacement for WebForms and its Page Controller model. It is rather an alternative for those looking to implement the MVC approach. The fact that it helps you write cleaner, more loosely coupled application code, with the advantages this brings in terms of testability and test-driven development, is amazing.

Now, one thing that in my opinion needs improvement is the way data is displayed. Having to iterate using inline code is not one of my favorite things to do. It reminds me of the spaghetti code that came along with the ASP programming model.

Definitely, there needs to be an enhanced version of the ASP.NET MVC Framework that hopefully will offer something like the bindable, easy-to-use server controls available with the ASP.NET WebForms model.

As of now, without a doubt, it's a promising framework that we'll certainly keep an eye on.